A/B Testing in the Ads Manager UI

Starting January 2023, we’re launching access to self-serve A/B testing in the X Ads Manager UI.

A/B testing is an experimentation framework that allows you to test performance hypotheses by creating randomized, mutually exclusive audiences that are exposed to a particular test variable.
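To illustrate what "randomized, mutually exclusive audiences" means in practice, here is a minimal sketch of deterministic bucketing. It is not X's implementation, which this article does not describe; the function name `assign_cell` and the hashing scheme are assumptions made for illustration.

```python
import hashlib


def assign_cell(user_id: str, test_id: str, num_cells: int) -> int:
    """Map a user to exactly one cell of a test (hypothetical helper).

    Hashing the test ID together with the user ID spreads users roughly
    evenly across cells, and the same user always lands in the same cell,
    so the audiences of the cells never overlap.
    """
    if num_cells < 2:
        raise ValueError("an A/B test needs at least 2 cells")
    digest = hashlib.sha256(f"{test_id}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % num_cells


# Each user is assigned to one and only one of the three cells.
cells = {uid: assign_cell(uid, "pet-store-test", 3) for uid in ("u1", "u2", "u3")}
```

Because the assignment depends only on the IDs, repeating the call for the same user and test returns the same cell every time.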

We offer self-serve access to our A/B testing tool to enable our partners to gather learnings and fine-tune their campaign strategies with data that is statistically significant.

We support A/B testing of creative assets only:

- Images

- Videos

- Text

- CTAs

Important: A/B testing is also available to marketing partners via the Ads API. Please refer to this resource for more information.

Use cases

A/B testing is most often used to support:

- Optimization use cases for performance-focused advertisers who want to understand what works best on X in order to optimize their campaigns.

- Learning use cases for branding-focused advertisers who want to use learnings to inform their creative strategy.

Example:

I run a pet store and want to understand which creative drives the best click-through rate (CTR) for my brand. My creative assets feature dogs, cats, and rabbits.

Split Testing Variable: Creative

Ad Groups: 3 (with one ad group ID per group).

Supported functionality

Objectives

All objectives are supported, except Takeovers. Dynamic Product Ads are not supported if A/B Testing is toggled on.

Variables available for testing

Creative

One A/B test can have up to 5 mutually exclusive buckets (i.e. individual ad groups per A/B test).

Budgets

Budgets are set at the ad group level for more granular control. Campaign Budget Optimization (CBO) is not supported with A/B testing.
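The constraints listed so far in this section can be collected into a simple pre-launch check. The sketch below is illustrative only: `CampaignDraft` and its field names are hypothetical and do not correspond to an Ads Manager or Ads API object.

```python
from dataclasses import dataclass
from typing import List

MAX_AD_GROUPS = 5  # "up to 5 mutually exclusive buckets" per A/B test


@dataclass
class CampaignDraft:
    """Hypothetical summary of a campaign draft, for illustration only."""
    objective: str
    ad_group_count: int
    uses_cbo: bool = False
    uses_dynamic_product_ads: bool = False


def ab_test_problems(draft: CampaignDraft) -> List[str]:
    """Return the reasons this draft cannot run as an A/B test."""
    problems = []
    if draft.objective.strip().lower() in {"takeover", "takeovers"}:
        problems.append("Takeovers do not support A/B testing")
    if draft.uses_dynamic_product_ads:
        problems.append("Dynamic Product Ads are not supported with A/B testing")
    if draft.uses_cbo:
        problems.append("Campaign Budget Optimization is not supported with A/B testing")
    if draft.ad_group_count > MAX_AD_GROUPS:
        problems.append(f"an A/B test can have at most {MAX_AD_GROUPS} ad groups")
    return problems
```

An empty list means the draft satisfies every constraint named above; the objective strings are placeholders rather than official objective names.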

Reporting

You have access to the same media metrics and conversion data normally available in Ads Manager.

Additionally, you can access A/B test-specific information like:

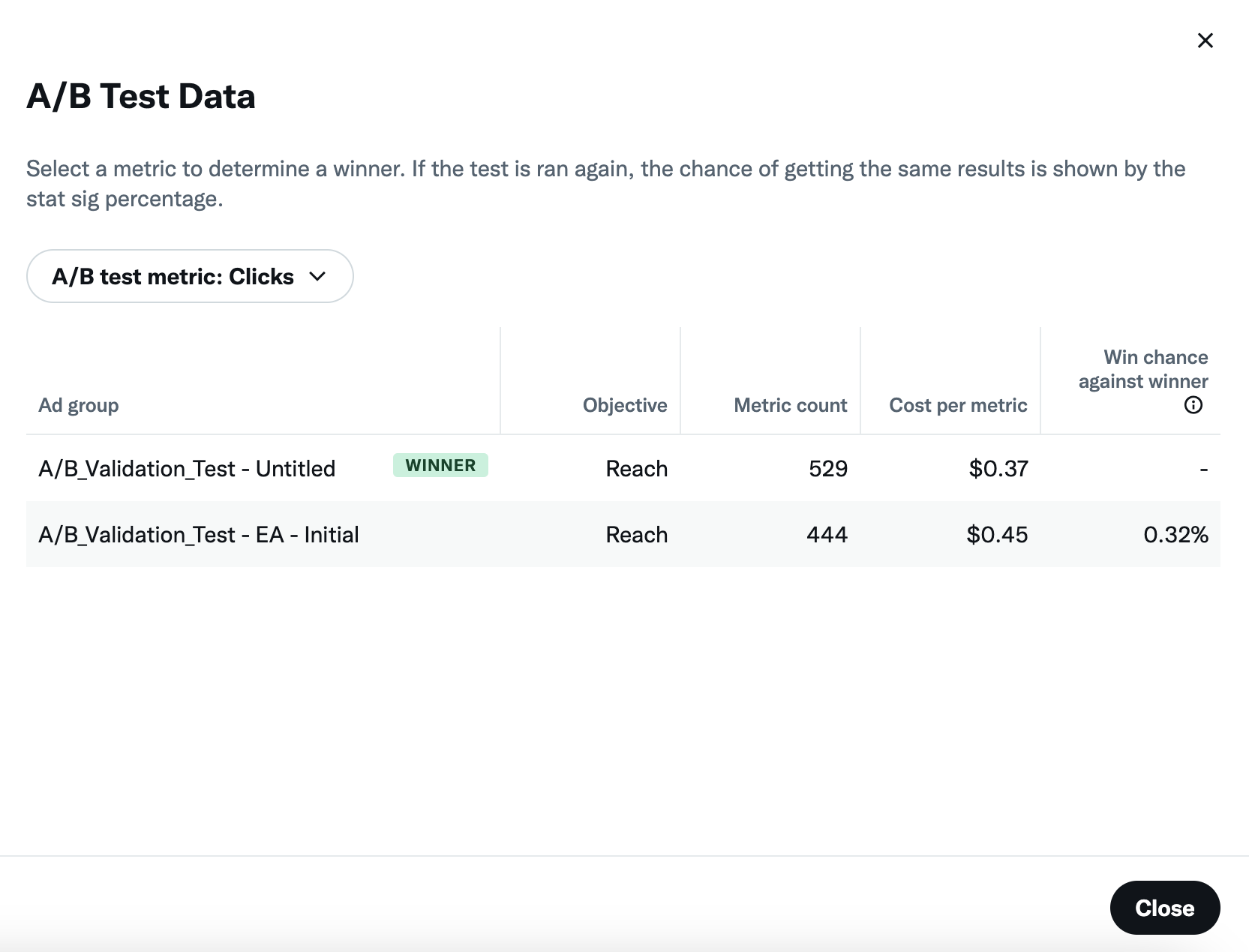

- Winning test cell

- Volume of events driven

- Cost per event

- Win probability if the test was run again

You can access this information by:

1. Selecting the icon next to the campaign in which you ran your A/B test

2. Selecting “A/B Test Data”

3. Selecting your success metric

4. Checking which cell from your split test is labeled as the winner

Best practices

A/B Tests should be executed with an ‘all else equal’ principle. This means that if the goal of the test is to compare creative variables across ad groups, the audiences and other setup parameters should mirror each other (‘all else equal’) to provide clean, viable learnings.

It’s important to note that changing one or more additional variables (for example, targeting a different age range in each ad group of an A/B test) would invalidate the A/B test results, because the audiences would no longer be comparable.

When you set up your A/B test, Ads Manager displays warnings if cells differ in more than one variable, but it does not prevent you from launching the test.
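The 'all else equal' principle can be checked mechanically before launch. The following is a minimal sketch, assuming each ad group is represented as a plain dictionary of settings; the keys used here (`creative`, `age_range`, `locations`) are hypothetical and are not Ads Manager field names.

```python
def differing_settings(ad_groups, test_variable="creative"):
    """List the settings, other than the test variable, that differ across ad groups."""
    # Collect every setting name used by any ad group, minus the variable under test.
    keys = set().union(*(group.keys() for group in ad_groups)) - {test_variable}
    # repr() lets unhashable values such as lists be compared in a set.
    return sorted(
        key for key in keys
        if len({repr(group.get(key)) for group in ad_groups}) > 1
    )


clean = [
    {"creative": "dogs.png", "age_range": "18-34", "locations": ["US"]},
    {"creative": "cats.png", "age_range": "18-34", "locations": ["US"]},
]
confounded = [
    {"creative": "dogs.png", "age_range": "18-34", "locations": ["US"]},
    {"creative": "cats.png", "age_range": "35-54", "locations": ["US"]},
]
print(differing_settings(clean))       # []
print(differing_settings(confounded))  # ['age_range']
```

Any name in the returned list is a second variable that would make the comparison between cells unreliable.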

Setup

To use the new self-serve A/B testing tool in the X Ads UI…

Navigate to ads.x.com

In the top right corner, select “Create Campaign”

Select your desired Objective

Click “Next”

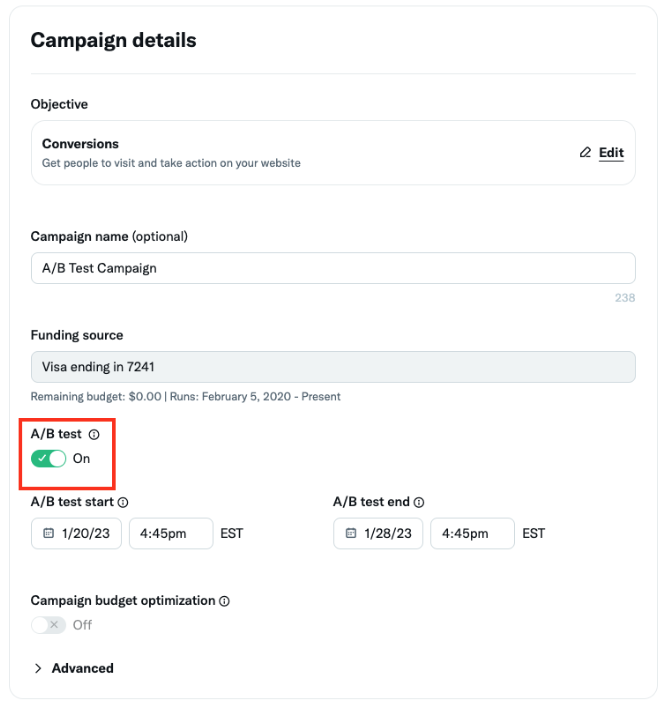

In the Campaign form, under “Campaign details”, toggle “A/B test” to ‘ON

Click “Next”

Input relevant ad group information under “Ad group details”

Click “Next”

Create at minimum 2 ads. These will be the variables tested in your A/B test.

Click “Next”

Review campaign details

Click “Launch campaign”

Reporting

To review the outcome of your A/B test...

1. Navigate to Ads Manager

2. Find the campaign in which you ran your A/B test. Then select the icon next to the campaign

3. Select “A/B Test Data”

4. Select your success metric

5. Check which cell from your split test is labeled as the winner

Interpreting Results

The cell with the lowest cost per selected metric will be labeled as the winning cell.
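As a worked illustration of this rule, the sketch below picks the cell with the lowest cost per event from spend and event counts. The numbers and the `winning_cell` helper are made up for the example; they are not Ads Manager output.

```python
def winning_cell(cells):
    """Return the cell name with the lowest cost per event.

    `cells` maps a cell name to a (spend, events) pair. Cells with zero
    events have no defined cost per event and are skipped.
    """
    costs = {
        name: spend / events
        for name, (spend, events) in cells.items()
        if events > 0
    }
    if not costs:
        raise ValueError("no cell has recorded any events")
    return min(costs, key=costs.get)


results = {
    "dogs": (500.0, 125),     # 4.00 per event
    "cats": (500.0, 100),     # 5.00 per event
    "rabbits": (500.0, 0),    # no events yet, so it cannot win
}
print(winning_cell(results))  # dogs
```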

The Win Chance metric associated with a losing cell indicates the likelihood that the cell will beat the current winning cell by the end of the A/B test. Ideally, losing cells should have as low a Win Chance as possible, which gives the highest degree of confidence that the results of the test are not due to chance alone.
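To build intuition for Win Chance, here is a minimal Bayesian sketch. It is not X's model, which this article does not specify: it assumes each cell's event rate (for example, clicks per impression) has a Beta posterior under a uniform prior, and it estimates by Monte Carlo sampling how often the losing cell's rate exceeds the winning cell's rate. The function name, inputs, and use of a rate instead of cost per event are all assumptions.

```python
import random


def win_chance(loser, winner, samples=20000, seed=0):
    """Estimate the probability that the loser's true rate beats the winner's.

    `loser` and `winner` are (events, trials) pairs. Each rate gets a
    Beta(1 + events, 1 + trials - events) posterior (uniform prior).
    """
    rng = random.Random(seed)  # fixed seed keeps the estimate reproducible
    l_events, l_trials = loser
    w_events, w_trials = winner
    wins = 0
    for _ in range(samples):
        l_rate = rng.betavariate(1 + l_events, 1 + l_trials - l_events)
        w_rate = rng.betavariate(1 + w_events, 1 + w_trials - w_events)
        if l_rate > w_rate:
            wins += 1
    return wins / samples


# Cats (90 clicks / 4,000 impressions) against the current winner,
# dogs (120 clicks / 4,000 impressions).
chance = win_chance(loser=(90, 4000), winner=(120, 4000))
```

With data like this the estimate comes out small, which matches the guidance above: a low Win Chance for the losing cell means the observed gap is unlikely to be due to chance alone. Two cells with identical data would instead give an estimate close to 0.5.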

Frequently asked questions

No, once a campaign is set up (either draft, live, or in the past), the A/B test toggle cannot be edited.

Yes. Audiences for each cell will remain mutually exclusive until the end of the campaign flight.

We recommend waiting until the end of your campaign flight to analyze results. However, you can also edit the end date to end the campaign early, and create another campaign. If you still have concerns, connect with your account manager.

At this time, we only support A/B tests with creative as the test variable.

We recommend at least 2 weeks.

No. However, ensuring a high volume of events will increase the likelihood of achieving statistically significant results.

Up to 5 ad groups, and each of those ad groups can have up to 5 ads. Reporting is only available at the ad group level.

A/B testing must be toggled on before the campaign is launched, even in draft. To create an A/B test you must create a new campaign.

A/B Test Data is available during the campaign flight and after the flight has concluded, allowing you to make optimization decisions mid-flight rather than waiting for the end.

X uses Bayesian A/B testing.

X uses Bayesian A/B testing (BayesAB) and therefore statistical significance is not relevant. Instead, the output of a Bayesian algorithm is win chance, which is reported in the A/B Test Data menu in Ads Manager.

Win Chance is the output of a Bayesian A/B test that measures the probability that a given cell will win relative to the current winning cell. A lower Win Chance indicates that we can be confident that the winning cell’s success is not due to chance alone.

Win Chance of a winning cell is only relevant when compared with one losing cell. In a test where there are multiple losing cells (i.e. a test of 3 or more total cells) it is impossible to report on the win chance of the winning cell compared to all other cells simultaneously. By reporting on the win chance of losing cells, we can report the relative power of all losing cells in the test relative to the winning cell.
